My overarching research goal is to understand the neurocognitive processes underlying dynamic interactions between human visual perception and goal-directed action. Some links between visual perception and action are arbitrary and acquired through repeated learning (e.g., we “stop” on seeing a “red” traffic light and “keep moving” on seeing “green”). Other functional links between perception and action are more implicit, automatic, and hard-wired. For example, seeing a group of people with angry faces is naturally associated with avoidance actions, whereas people with happy, friendly faces tend to motivate approach actions. My research investigates how different brain regions coordinate countless connections between perception and action to mediate motor behaviours in various physical and social contexts. I also study the neurocognitive bases of deficient perception-action links in impaired visuomotor coordination (as observed in many visual or motor deficits) and in maladaptive social behaviours (e.g., excessive avoidance behaviour, which is a hallmark of many emotional disorders). My research employs psychophysics and neuroimaging methods, such as MEG (magnetoencephalography) and functional MRI, in adults and children with typical and atypical brain development.

Current research

- Temporal dynamics of neural communication underlying the perception-action link: Face perception naturally triggers goal-directed actions based on social and behavioural motivations to either ‘approach for more exploration’ or ‘avoid danger.’ For example, seeing angry mobs or panicked crowds alerts us to potential dangers and motivates immediate avoidance behaviours. Detection of threats from others’ faces must occur rapidly, since delays are often costly and maladaptive. This project examines the groups of brain regions engaged in action-oriented (and time-sensitive) visual processing and the timing of the neural computations that trigger immediate connections between vision and action. We also examine the effects of anxiety on the functional interactions among widely distributed brain regions underlying the integration of visual perception, emotion, and goal-directed action.

- Visual perception of ensembles and objects facilitated by different action goals: This project examines how the human brain is wired to mediate different units of perception (individual objects and ensembles) as a means of managing efficient and flexible descriptions of the visual world. The visual system quickly extracts a higher-order summary description (e.g., the average, variance, or numerosity of a set) from an image containing many objects, while at the same time perceiving a few objects as separate entities. The two representations provide complementary visual information about the parts and the whole of an image. Using fMRI, MEG, and psychophysics, we examine the neural computations underlying these two types of perception. We also investigate when the brain selectively prioritizes global, ensemble perception over individual-object perception, or vice versa, depending on the current action goal.

- Altered brain network connectivity in children with developmental disorders: This project examines different patterns of functional brain network connectivity in children with atypical brain development, including those with amblyopia or dyslexia, compared to typically developing children.

-

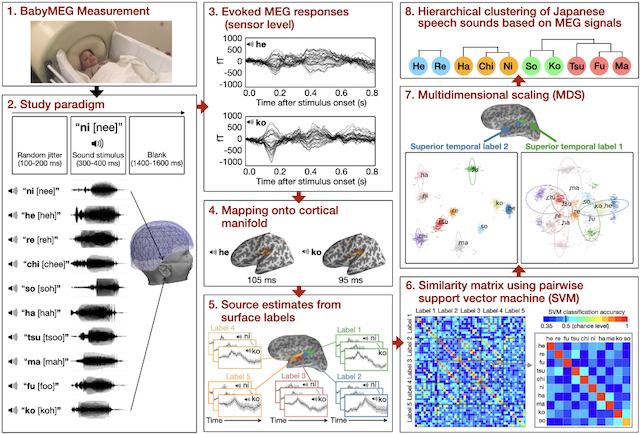

MEG decoding of children’s brains: This decoding project is to discover brain dynamics in perceiving spoken languages during the early period of life (6 months - 4 years). Based on our preliminary MEG decoding data, we expect to be able to characterize fine-scale time courses of different Japanese speech sounds encoded across the superior temporal plane of the children’s brains. We can also determine functional brain connectivity emerging in children with vs. without previous exposure to the Japanese language. In addition to contributing to developmental neuroscience, our work may provide valuable information for speech-language pathology and designing a device for replaying spoken speech sounds encoded in the brain to assist children with hearing or specific language impairments. We also hope to develop and extend this approach to test other perceptual abilities, including understanding facial expressions and reading other’s actions and intentions.
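
To make the ensemble summary descriptions named in the second project concrete (average, variance, and numerosity of a set), here is a minimal sketch of the arithmetic behind them. It only shows what the statistics are; it is not a model of how the visual system computes them, and the function name `ensemble_summary` and the example display values are hypothetical.

```python
from statistics import fmean, pvariance


def ensemble_summary(feature_values):
    """Summarize a set of items by numerosity, mean, and variance.

    `feature_values` holds one number per item in the display
    (for example, the size of each object). Hypothetical helper,
    for illustration only.
    """
    if not feature_values:
        raise ValueError("need at least one item")
    return {
        "numerosity": len(feature_values),        # how many items are in the set
        "mean": fmean(feature_values),            # average feature value
        "variance": pvariance(feature_values),    # spread around that average
    }


# Hypothetical display: diameters of eight circles, in arbitrary units.
circle_diameters = [1.0, 1.5, 2.0, 2.5, 1.0, 1.5, 2.0, 2.5]
summary = ensemble_summary(circle_diameters)
print(summary)
```

The individual values in `circle_diameters` correspond to the object-level representation, while `summary` corresponds to the ensemble-level one; the two describe the same display at different scales.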